Defining the problem

Surveys have always played an integral role in most LMS applications. Collecting course feedback and sentiment from learners and/or instructors helps managers and administrators shape their business practices and course content. At Meridian LMS, an e-commerce component of our application meant that many learners were also paying customers looking for on-demand courses. For us, this made surveys even more mission-critical.

A sustained round of discovery with our customers revealed important information about our legacy survey system. The process of creating and managing surveys had become cumbersome and confusing. The components required to create a survey were isolated from one another, sometimes housed in completely separate applications.

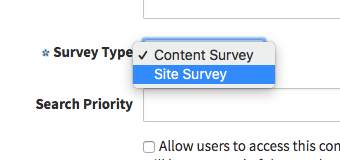

Another weak point our customers identified was that surveys were not portable. There were no standalone surveys capable of supporting multiple use cases. Instead, surveys came in distinct “types” that forced customers to build each one for a specific purpose. Once set up, that purpose could not be changed.

Surveys set up as part of a course or some other piece of content were considered “Content Surveys” and could not exist outside the content they “belonged to.”

“Site Surveys,” on the other hand, could exist independently of other content. They could be discovered in the LMS catalog by searching or simply browsing. These were truly standalone surveys.

Beyond the confusion caused by survey types, the process of gathering the various ingredients required to build a survey was complicated and disjointed.

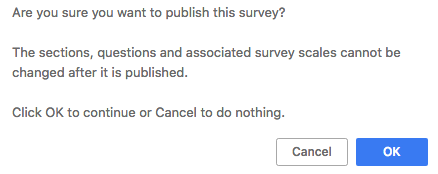

Imagine jumping through several hoops across multiple applications, only to be greeted with a message like this when it came time to publish your brand-new survey:

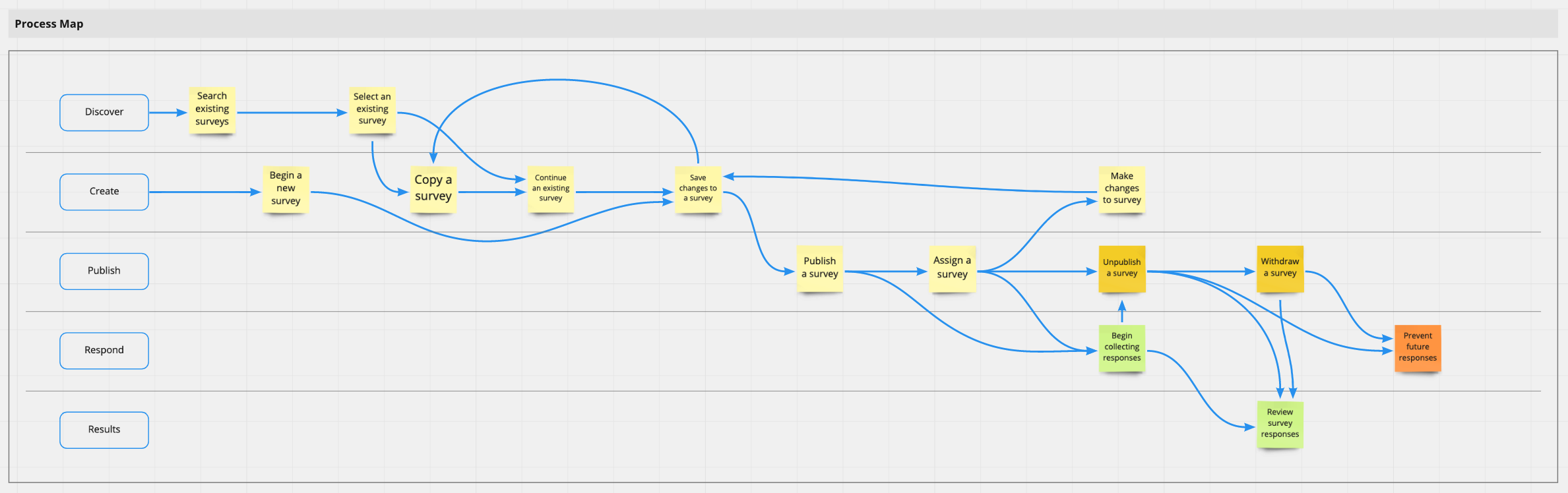

Getting a survey published, though a major accomplishment, was a small task compared with hunting down its results. As we started to map out this workflow, it was difficult not to be overwhelmed by the process:

- Navigate to the Reporting Console in the application's “Admin” area.

- Use the search function to find an existing report called “Survey Statistics.”

- Select the “Survey Statistics” report from your search results.

- Once it was open, use ANOTHER search function to find the exact survey, assuming you could remember its name.

- Select the survey from those search results to associate it with the “Survey Statistics” report.

- Back in the report, with the survey chosen, set a date range to limit the results to a specific time frame. (Even if the time frame didn't matter, you still had to go through this step.)

- Launch the report, which would then open in a new pop-up window.

Addressing customer needs

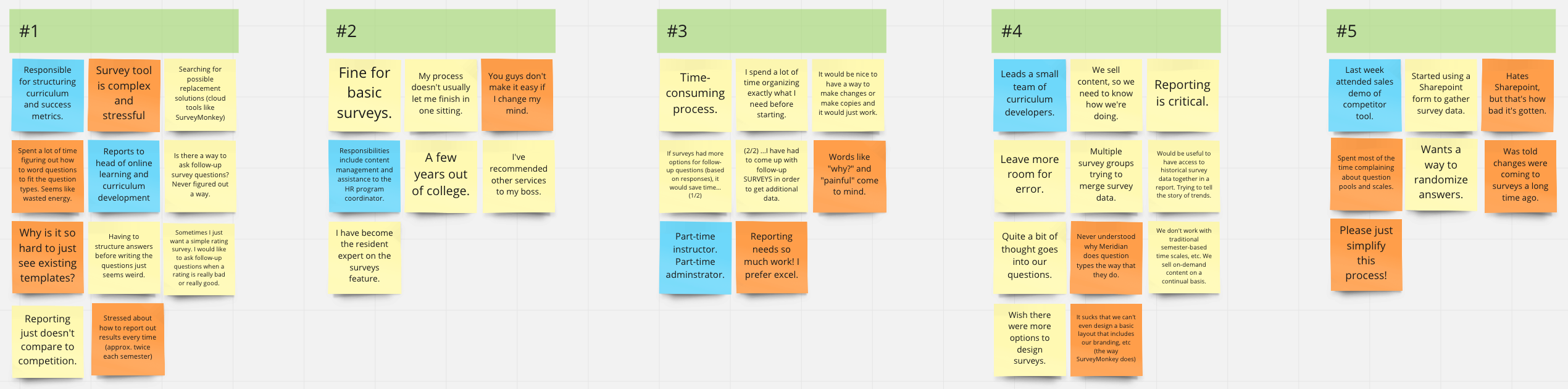

As we concluded our initial round of discovery with customers, a clear picture began to emerge.

The customers, in this case, comprised three key personas:

- content creators and administrators,

- instructors, and

- facilitators (typically stand-ins or assistants to instructors or content administrators).

Our customers were pretty clear about the primary points we needed to hit for this design iteration to be considered a success:

- Surveys had to be easier to create.

- Surveys had to be flexible enough to either stand alone or exist to supplement other content.

- Surveys had to look beautiful.

- Question types needed to be expanded.

- Existing surveys had to be migrated without loss of data integrity.

- Branching logic was required to direct users down different paths based on their responses.

- The reporting experience needed to improve…a LOT.

Examining the competitive landscape

We then explored some commonly known web-based survey applications, cataloging what made them simple and easy to use and, where they fell short, which elements posed usability challenges. As I compared these tools, many of which our existing clients had opted (or threatened) to use instead of our survey feature, I recognized several elements that seemed to be industry standard:

- the ability to select a question type at any point before, during, or after creating a question;

- the ability to quickly add answers from within the “create/edit question” workflow, rather than leaving that workflow to create the missing element separately in another part of the application simply because it is reusable or shareable; and

- the option to shape a user's journey through a survey based on their ongoing answers (something we call adaptive paths in the educational technology industry), which was usually available in at least some cursory form.

Building a more perfect mousetrap

Addressing customer use cases

As the next iteration of surveys began to take shape, the team worked to isolate and annotate each process. Many sub-processes also had to be accounted for, including

- creating new question types,

- switching question types,

- adding/removing response options,

- re-using questions and responses,

- grouping questions and responses, and

- branching logic based on responses.

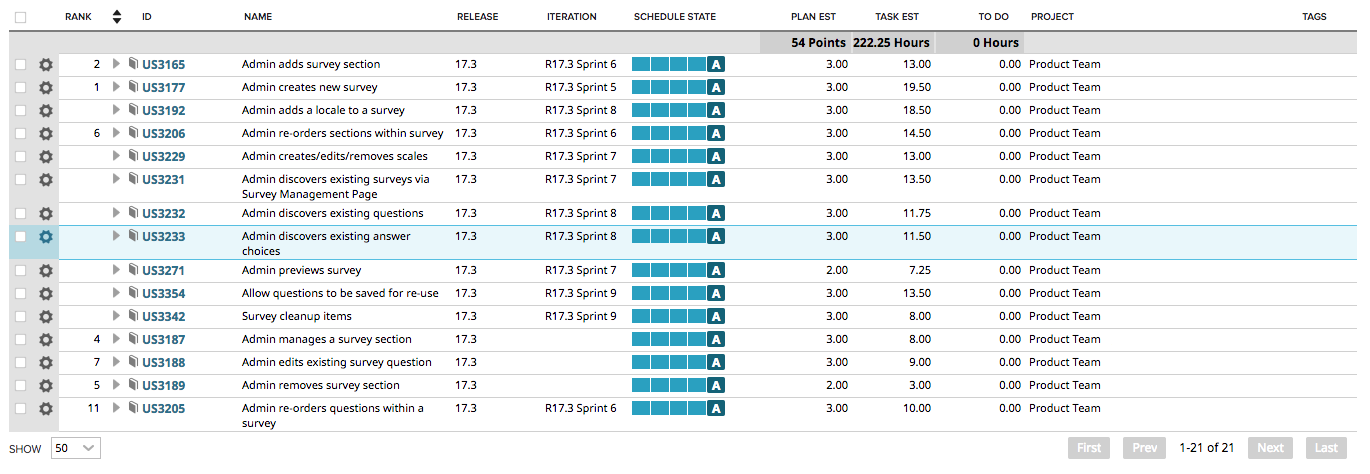

Our confidence grew as we validated our understanding of these processes with our customers, and we worked concurrently with our stakeholders and engineers to flesh out and groom the product backlog.

Shaping the solution

After a few short iterations centered on the key innovations we wanted to bring to the surveys feature, prototypes of a much-improved solution began to materialize. Soon after, development began on a new generation of the survey tool, giving customers several significant experience upgrades.

Rallying around successes

With the new and improved surveys feature in the wild, we could finally take a breath and review the exciting advancements we had brought to a very eager crowd of customers.

-

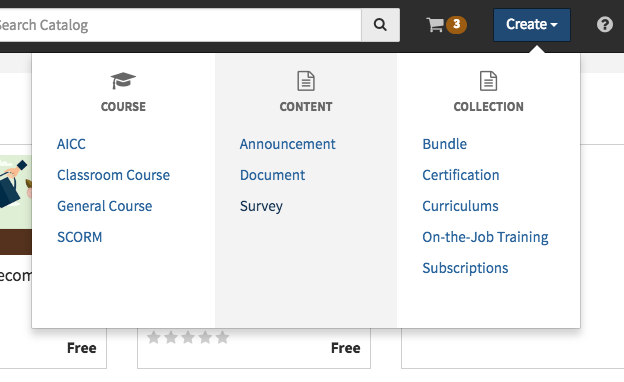

The surveys feature was completely extricated from the legacy administration console. This meant that surveys were now presented along with the many other content creation options in the “quick create” menu. Additionally, this feature carve out brought us one step closer to being able to sunset the legacy administration system entirely.

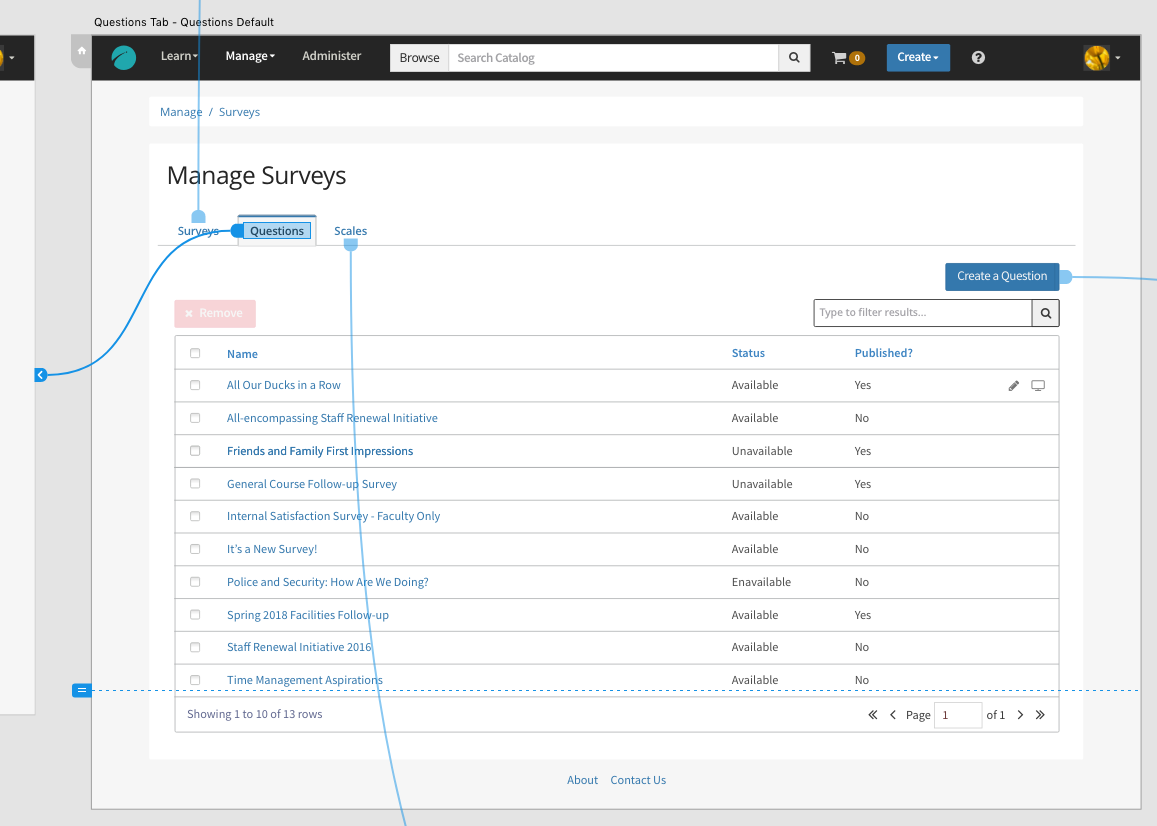

The "quick create" menu, now featuring surveys. - Navigating to the surveys feature started with an automatically-curated listing of existing surveys, making discovery by browsing a breeze. A prominent and friendly search option was available in order to quickly discover any surveys.

- Switching from browsing surveys to browsing existing questions or answers was as easy as switching tabs. This was a dramatic shift from the previous experience, and customers were very excited at the prospect of no longer needing to navigate separate content systems to manage these assets.

- Adding branching logic was now not only possible, but simple and delightful.

- Building surveys now featured advanced yet easy-to-use inline editing that never required creators to leave their workflow.

- Saving in-progress surveys became effortless, and finding them again later was as simple as navigating to the surveys feature.

- Surveys were now fully self-contained content objects: they could be associated with any eligible content container, such as a course or curriculum, or simply stand alone and be linked to from anywhere.

- The underlying code for the surveys feature was brought into full WCAG 2.0 (AA) compliance, both for content creators and for those taking the surveys.

- Taking surveys on a mobile device was simple and compelling, giving our administrators maximum flexibility to collect their crucial data.

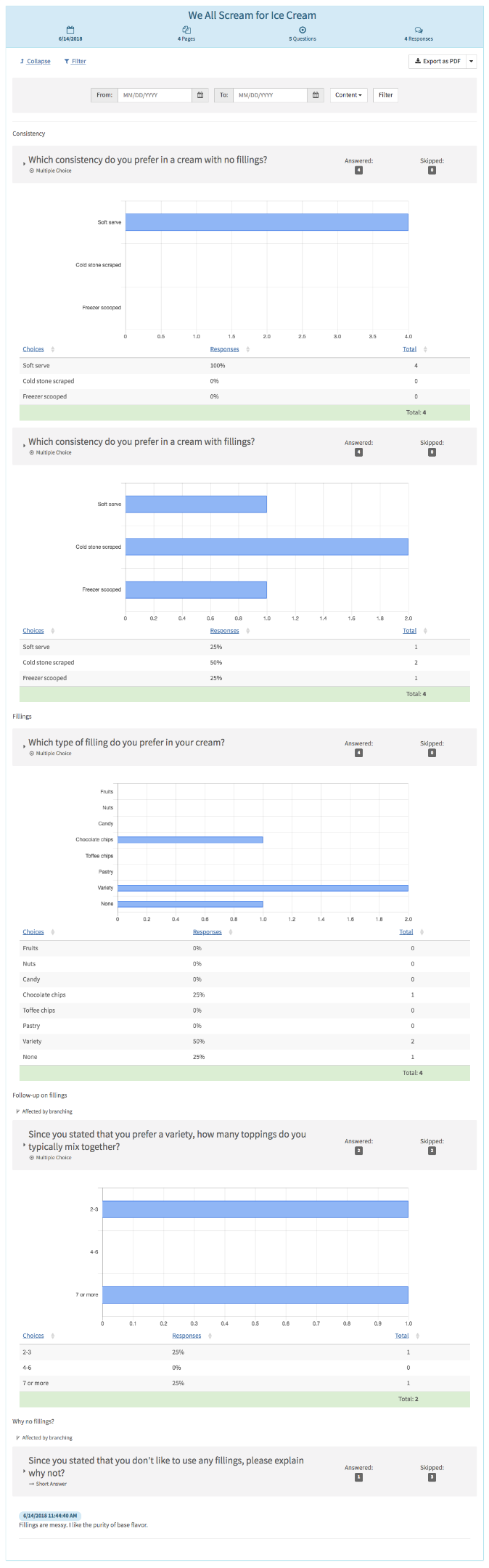

- Navigating survey results was a completely re-imagined experience, featuring real-time results with prominently displayed recent responses and bold visualizations.

The new surveys experience represented a great leap forward for what had been a problematic and underutilized feature. With this iteration, we ushered in a new era of utility for surveys, on a foundation poised to scale to even more question types and more complex branching logic.

Response to these upgrades was very positive. Customers found this integrated surveys solution to be a highly effective fit for their needs. Follow-up efforts included course improvements that allowed instructors to assign course pre-work that included surveys, giving them yet another tool to help craft their curriculum and methodology.